Beyond Delivery: Realizing AI’s Potential Across the Value Stream

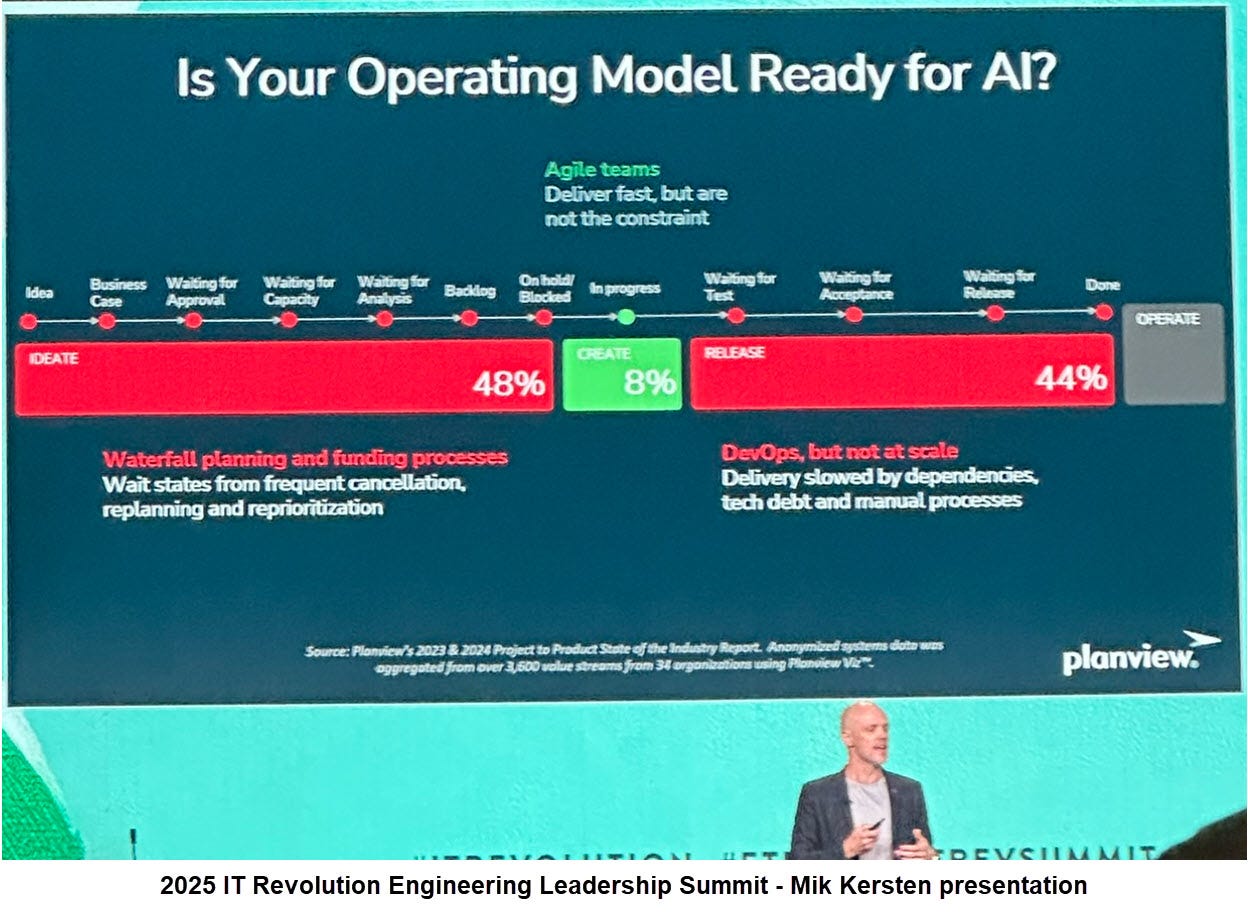

At the 2025 Engineering Leadership Tech Summit, Mik Kersten previewed ideas from his upcoming book, Output to Outcome: An Operating Model for the Age of AI. He reminded us of a truth often overlooked in digital transformation: Agile delivery teams are not the constraint in most cases.

Kersten broke the software value stream into four phases (Ideate, Create, Release, and Operate) and showed how the majority of waste and delay happens outside of coding. One slide in particular resonated with me: Agile teams accounted for just 8% of overall cycle time. The real delays sat at the bookends: 48% in ideation, slowed by funding models, approvals, and reprioritizations; and 44% in release, bogged down by dependencies, technical debt, and manual processes.

This framing raises a critical question: if we only apply AI to coding or delivery automation, are we just accelerating the smallest part of the system while leaving the actual bottlenecks untouched?

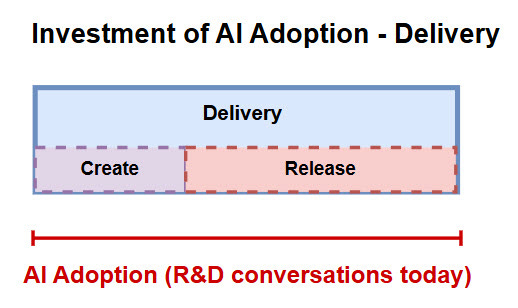

AI in the Delivery Stage: Where the Industry Stands

In a recent DX Engineering Enablement podcast, Laura Tacho and her co-hosts discussed the role of AI in enhancing developer productivity. Much of their discussion centered on the Create and Release stages: code review, testing, deployment, and CI/CD automation. Laura made a compelling point about moving beyond “single-player mode”:

“AI is an accelerant best when it’s used at an organizational level, not when we just put a license in the hands of an individual… Platform teams can own a lot of the metaphorical AI headcount and apply it in a horizontal way across the organization.”

Centralizing AI adoption and applying it across delivery produces leverage, rather than leaving individuals to experiment in isolation. But even this framing is still too narrow.

The Missing Piece: AI Adoption Across the Entire Stream

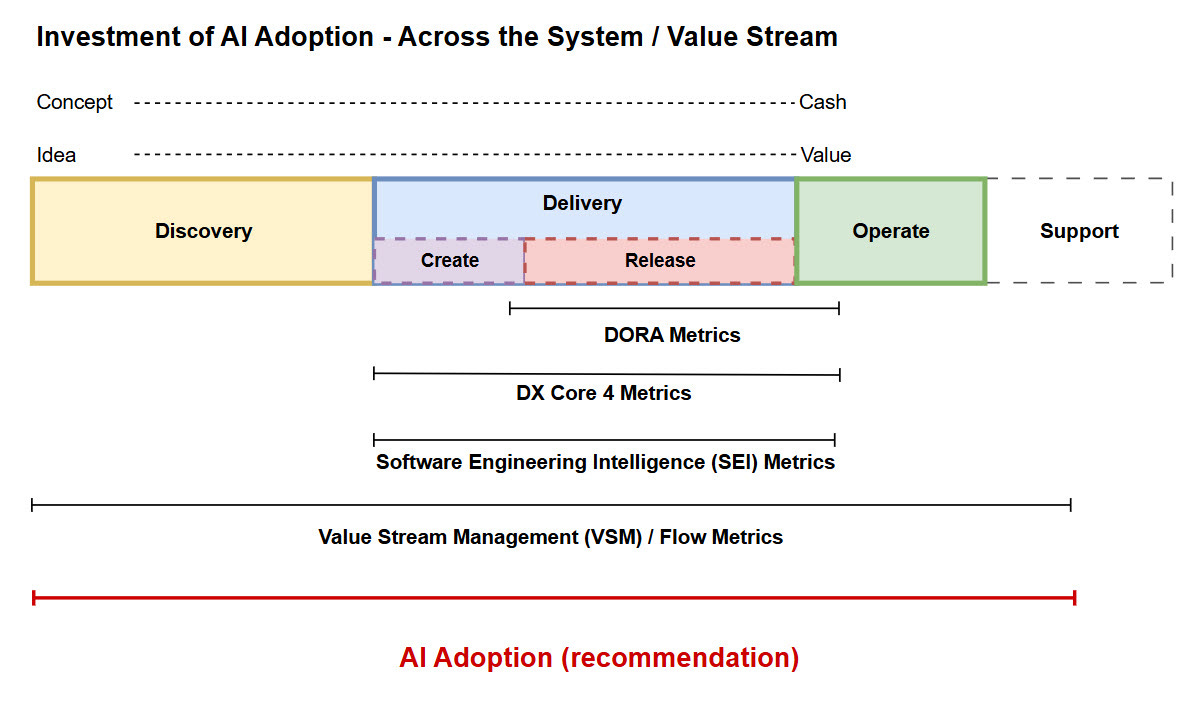

The real opportunity is to treat AI not as a tool for delivery efficiency, but as a partner across the entire value stream. That means embedding AI into every stage and measuring it with system-level visibility, not just delivery dashboards.

This is why I value platforms that integrate tool data, system metrics, and visibility dashboards across the whole stream, rather than tools that stop at delivery.

Of course, full-stream visibility platforms are more expensive, and in many organizations, only R&D teams are driving efforts to improve flow. As I’ve argued in past writing on SEI vs. VSM, context matters: sometimes the right starting point is SEI, when delivery is the bottleneck. But when delays span ideation, funding, or release, only a VSM platform can expose and address systemic waste.

AI opportunities across the stream:

Ideation (48%) – Accelerate customer research, business case drafting, and approvals; surface queues and wait states in one view.

Create (8%) – Apply AI to coding, reviews, and testing, but tie it to system outcomes, not vanity speedups.

Release (44%) – Automate compliance, dependency checks, and integration work to reduce handoff delays.

Operate – Target AI at KTLO and incident patterns, feeding learnings back into product strategy.

When AI is applied across the whole system (value stream), we can ask a better question: not “How fast can we deploy?” but “How much can we compress idea-to-value?” Moving from 180 days to 90 days or less becomes possible when AI supports marketing, product, design, engineering, release, and support, and when the entire system is measured, not just delivery.
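To make the arithmetic concrete, here is a rough sketch that applies the cycle-time shares from Kersten's slide to the 180-day figure above. Pairing those two numbers is my assumption for illustration, as are the 2x speedup factors and the `idea_to_value_days` helper; Operate is left out because the slide's shares cover only the first three phases.

```python
# Illustrative only: applies the cycle-time shares quoted earlier to the
# 180-day idea-to-value figure. Combining the two is an assumption.
TOTAL_DAYS = 180
SHARES = {"Ideate": 0.48, "Create": 0.08, "Release": 0.44}


def idea_to_value_days(speedups):
    """Total days after dividing each phase's duration by its speedup factor.

    Phases missing from `speedups` are left unchanged (factor 1.0).
    """
    return sum(
        TOTAL_DAYS * share / speedups.get(phase, 1.0)
        for phase, share in SHARES.items()
    )


# Hypothetical scenario 1: AI doubles the speed of the Create phase only.
create_only = idea_to_value_days({"Create": 2.0})

# Hypothetical scenario 2: AI doubles the speed of Ideate and Release instead.
bookends = idea_to_value_days({"Ideate": 2.0, "Release": 2.0})

print(f"Create only: {create_only:.1f} days")
print(f"Bookends:    {bookends:.1f} days")
```

Under these assumptions, doubling coding speed trims the total from 180 to about 172.8 days, while the same improvement at the bookends lands near 97.2 days. The numbers are hypothetical, but the shape of the result is the point: the 90-day target is only within reach when the work outside of Create gets faster.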

VSM vs. Delivery-Only Tooling

This is where tooling distinctions matter. DX Core 4 and SEI platforms, such as LinearB, focus on delivery (Create and Release), which is valuable but limited to one part of the system. Planview Viz and other VSM platforms, by contrast, elevate visibility across the entire value stream.

Delivery-only dashboards may show how fast you’re coding or deploying. But Value Stream Management reveals the actual business constraints, often upstream in funding, prioritization, PoCs, and customer research, or downstream in handoffs and release.

Without that lens, AI risks becoming just another tool that speeds up developers without improving the system.

AI as a Force Multiplier in Metrics Platforms

AI embedded directly into metrics platforms can change the game. In a recent Product Thinking podcast, John Cutler observed:

“We talked to a company that’s spending maybe $4 million in staff hours per quarter around just people spending time copying and prepping for all these types of things… All they’re doing is creating a dashboard, pulling together a lot of information, and re-contextualizing it so it looks the same in a meeting. I think that’s just a massive opportunity for AI to be able to help with that kind of stuff.”

This hidden cost of operational overhead is real. Leaders and teams waste countless hours aggregating and reformatting data into slides or dashboards to make it consumable.

Embedding AI into VSM or SEI platforms removes that friction. Instead of duplicating effort, AI can generate dashboards, surface insights, and even facilitate the conversations those dashboards are meant to support.

This is more of a cultural shift than a productivity gain. Less slide-building, more strategy. Less reformatting, more alignment. And metrics conversations that finally scale beyond the few who have time to stitch the story together manually.

The ROI Lens: From Adoption to Efficiency

For AI adoption, the ROI question is no longer whether to invest; that decision is now a given. As Atlassian’s 2025 AI Collaboration Report shows, daily AI usage has doubled in the past year, and executives overwhelmingly cite efficiency as the top benefit.

The differentiator now is how efficiently you manage AI’s cost, just as the cloud debate shifted from whether to adopt to how well you could optimize spend.

But efficiency cannot be measured by isolated productivity gains. Atlassian found that while many organizations report time savings, only 4% have seen transformational improvements in efficiency, innovation, or work quality.

The companies breaking through embed AI across the system: building connected knowledge bases, enabling AI-powered coordination, and making AI part of every team.

That’s why the ROI lens must be grounded in flow metrics. If AI adoption is working, we should see:

Flow time shrinks

Flow efficiency rises

Waste reduction becomes visible in the stream

Flow velocity accelerates (more items delivered at the same or lower cost)

Flow distribution rebalances (AI resolving technical debt and reducing escaped defects)

Flow load stabilizes (AI absorbing repetitive work and signaling overload early)

System-wide VSM platforms make these signals visible, showing whether AI is accelerating the idea-to-value process across the entire stream, not just helping individuals move faster.
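For readers who want to see how these signals are derived, here is a minimal sketch of the flow metrics computed from a list of work items. The `FlowItem` schema, the `flow_metrics` helper, and the sample data are hypothetical; a real VSM platform pulls this from connected tools and will define the fields differently. The definitions follow the usual Flow Framework reading: flow time is elapsed days from start to finish, and flow efficiency is active time divided by total flow time.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class FlowItem:
    """One unit of work in the value stream (hypothetical schema)."""
    kind: str                 # "feature", "defect", "risk", or "debt"
    started: date
    finished: Optional[date]  # None while the item is still in progress
    active_days: float        # days someone was actually working on it


def flow_metrics(items):
    """Compute basic flow metrics for one reporting period."""
    done = [i for i in items if i.finished is not None]
    flow_times = [(i.finished - i.started).days for i in done]
    total_time = sum(flow_times)
    active_time = sum(i.active_days for i in done)
    kinds = Counter(i.kind for i in done)
    return {
        "flow_velocity": len(done),               # items completed
        "flow_load": len(items) - len(done),      # items still in progress
        "avg_flow_time_days": total_time / len(done) if done else 0.0,
        "flow_efficiency": active_time / total_time if total_time else 0.0,
        "flow_distribution": {k: n / len(done) for k, n in kinds.items()},
    }


# Made-up sample data: two finished items and one still in progress.
items = [
    FlowItem("feature", date(2025, 1, 6), date(2025, 2, 5), active_days=6),
    FlowItem("defect", date(2025, 1, 13), date(2025, 1, 23), active_days=4),
    FlowItem("debt", date(2025, 1, 20), None, active_days=2),
]
metrics = flow_metrics(items)
print(metrics)
```

In this sample, the two finished items took 30 and 10 days with 10 days of active work between them, so flow efficiency is 25%. The other 75% is wait time, which is where the ideation and release delays described earlier tend to hide.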

Bringing It Full Circle

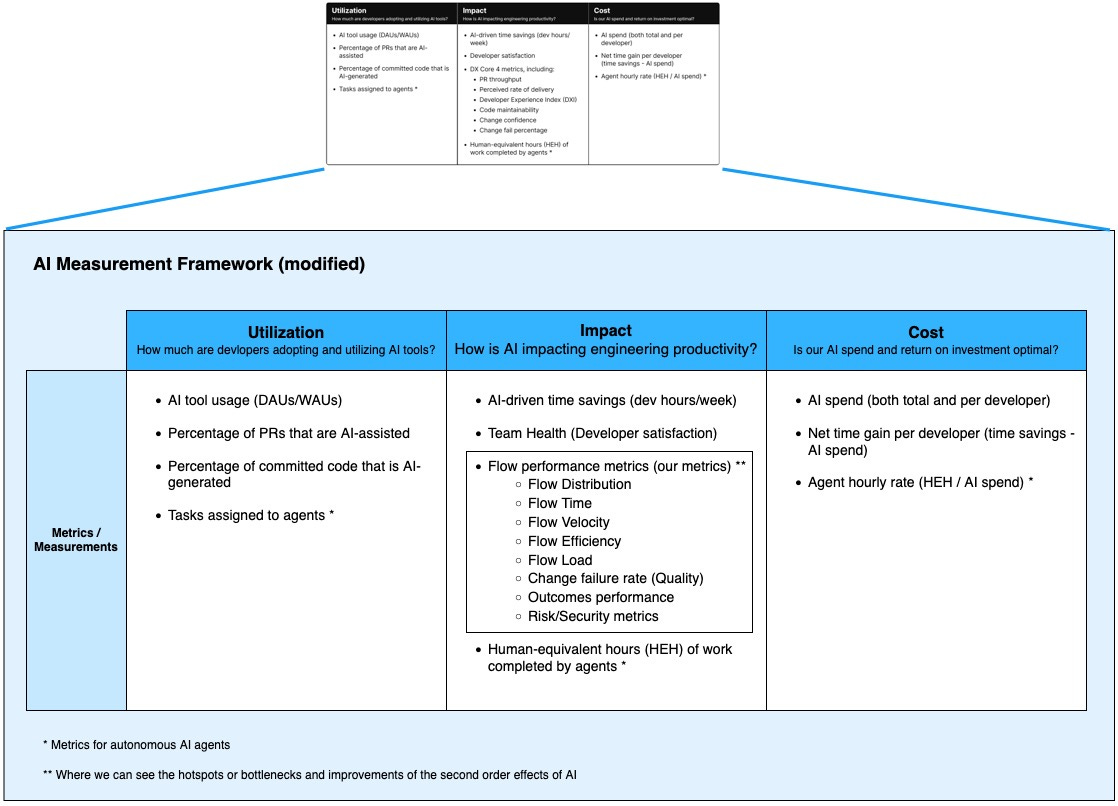

In recent conversations with a large organization’s CTO, and again with Laura while exploring how DX and Anthropic measure AI, I kept returning to the same point: we already have the metrics to know if AI is making an impact. AI is now just another option or tool in our toolbox, and its effect is reflected in flow metrics, change failure rates, and developer experience feedback.

We are also beginning to adopt DX AI Framework metrics, which are structured around Utilization, Impact, and Cost, aligning with the metrics that companies like Dropbox and Atlassian currently measure. But even as we incorporate these, we continue to lean on system-level flow metrics as the foundation. They are what reveal whether AI adoption is truly improving delivery across the value stream, from ideation to production.

Leadership Lessons from McKinsey and DORA

This perspective also echoes Ruba Borno, VP at AWS, in a recent McKinsey interview on leading through AI disruption. She noted that while AI’s pace of innovation is unprecedented, only 20–30% of proofs of concept reach production. The difference comes from data readiness, security guardrails, leadership-driven change management, and partnerships.

And the proof is tangible: Canva, working with Amazon Bedrock on AWS, moved from the idea of Canva Code to a launched product in just 12 weeks. That’s precisely the kind of idea-to-operation acceleration we need to measure. It shows that when AI is applied systematically, you don’t just make delivery faster; you also make the entire flow from concept to customer measurably shorter.

The 2025 DORA State of AI-Assisted Software Development Report reinforces this reality. Their cluster analysis revealed that only the top performers, approximately 40% of teams, currently experience AI-enhanced throughput without compromising stability. For the rest, AI often amplifies existing dysfunctions, increasing change failure rates or generating additional waste.

Leadership Implications: What the DORA Findings Mean for You

The 2025 DORA report indicates that only the most mature teams currently benefit from AI-assisted coding. For everyone else, AI mostly amplifies existing problems. What does that mean if you’re leading R&D?

1. Don’t skip adoption, but don’t roll it out unthinkingly.

AI is here to stay, but it’s not a silver bullet. Start small with teams that already have strong engineering practices, and use them to build responsible adoption patterns before scaling.

2. Treat AI as an amplifier of your system.

If your flow is healthy, AI accelerates it. If your flow is dysfunctional, AI makes it worse. Think of it like a turbocharger: great when the engine and brakes are tuned, dangerous when they’re not.

3. Use metrics to know if AI is helping or hurting.

Flow time, efficiency, and distribution should improve.

DORA’s stability metrics (such as change failure rate) should remain steady or decline.

Developer sentiment should show growing confidence, not frustration.

4. Fix bottlenecks in parallel.

AI won’t remove waste; it will expose it faster. Eliminate approval delays, reduce tech debt, and streamline release processes so AI acceleration actually creates value.

5. Keep the message balanced.

The lesson isn’t “don’t adopt AI.” It’s: adopt responsibly, measure outcomes, and strengthen your system so that AI becomes an accelerant, not a liability.
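The checks in point 3 can be sketched as a simple before-and-after comparison. This is only an illustration: the `PeriodSnapshot` fields, the `ai_impact_signals` helper, and the sample values are hypothetical, and flow distribution is omitted because whether it "improved" depends on your target mix.

```python
from dataclasses import dataclass


@dataclass
class PeriodSnapshot:
    """Metrics for one reporting period (hypothetical field names)."""
    avg_flow_time_days: float
    flow_efficiency: float      # 0.0 to 1.0
    change_failure_rate: float  # 0.0 to 1.0
    dev_sentiment: float        # e.g. an average survey score


def ai_impact_signals(before, after):
    """Return one boolean per check; True means the metric moved the right way."""
    return {
        "flow_time_shrank": after.avg_flow_time_days < before.avg_flow_time_days,
        "flow_efficiency_rose": after.flow_efficiency > before.flow_efficiency,
        "stability_held": after.change_failure_rate <= before.change_failure_rate,
        "sentiment_improved": after.dev_sentiment > before.dev_sentiment,
    }


# Made-up example: throughput improves after an AI rollout, stability does not.
before = PeriodSnapshot(40.0, 0.15, 0.12, 3.4)
after = PeriodSnapshot(32.0, 0.20, 0.18, 3.6)
signals = ai_impact_signals(before, after)
print(signals)
```

In the made-up example, flow time, efficiency, and sentiment all improve while the change failure rate rises, so `stability_held` comes back `False`. That is the pattern to watch for: AI speeding up a system that is not yet ready for the extra load.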

Ruba’s message, reinforced by both McKinsey and DORA, leads to the same conclusion: AI adoption succeeds when it’s measured at the system level, tied to business outcomes, and championed by leadership. Without that visibility, organizations risk accelerating pilots that never translate into value.

Conclusion: Beyond Delivery

The conversation about AI in software delivery is maturing. It’s no longer just about adoption, but about managing costs and system impact. AI must be measured not only by its utilization but also by how it improves flow efficiency, compresses the idea-to-value cycle, and reduces systemic waste.

The organizations that will win in this new era are those that:

Embed AI across the entire value stream, not just in delivery.

Measure ROI through flow metrics that connect improvements to business outcomes.

Manage AI’s cost as carefully as they once managed cloud costs.

Lead with visibility, change management, and partnerships to scale adoption.

And critically, successful AI integration requires more than deploying tools. It requires thoughtful measurement, training, and implementation best practices that sustain quality, applied consistently across all roles, from product and design to operations and support. Only then can organizations ensure that the promise of acceleration improves outcomes without undermining the collaboration and sustainability that long-term software success depends on.

In short: AI in delivery is helpful, but AI across the value stream is transformational.

References

Atlassian. (2025). How leading companies unlock AI ROI: The AI Collaboration Index. Atlassian Teamwork Lab. Retrieved from https://atlassianblog.wpengine.com/wp-content/uploads/2025/09/atlassian-ai-collaboration-report-2025.pdf

Borno, R., & Yee, L. (2025, September). How to lead through the AI disruption. McKinsey & Company, At the Edge Podcast (transcript). Retrieved from https://www.mckinsey.com

Cutler, J. (2025, September 23). Product Thinking: Freeing Teams from Operational Overload [Podcast]. Episode 247. Apple Podcasts.

DX. (2025). Engineering Enablement Podcast, Episode 90 [Podcast]. Episode excerpt on AI’s role in developer productivity and platform teams (quoted in article from Laura Tacho).

DX (Developer Experience). (2025). Measuring AI code assistants and agents: The DX AI Measurement Framework™. DX Research, co-authored by Abi Noda and Laura Tacho. Retrieved from https://getdx.com (Image: DX AI Measurement Framework).

Kersten, M. (2025). Output to Outcome: An Operating Model for the Age of AI (forthcoming). Presentation at the 2025 Engineering Leadership Tech Summit.

Google Cloud & DORA (DevOps Research and Assessment). (2025). 2025 State of AI-Assisted Software Development Report. Retrieved from https://cloud.google.com/devops/state-of-devops

Further Reading

For readers interested in exploring these ideas further, here are a few related pieces from my earlier writing:

AI in Software Delivery: Targeting the System, Not Just the Code

AI Is Improving Software Engineering. But It’s Only One Piece of the System

Decoding the Metrics Maze: How Platform Marketing Fuels Confusion Between SEI, VSM, and Metrics

This article was originally published on September 30, 2025.