Leading Through the AI Hype in R&D

Note: AI is evolving rapidly, transforming workflows faster than expected. Most of us can't predict how quickly or to what extent AI will change our teams or workflows. My focus for this post is on the current state, pace of change, and the reality vs hype at the enterprise level. I promote the adoption of AI and encourage every team member to embrace it.

I’ve spent the past few weeks deeply immersed in “vibe coding” and experimenting with agentic AI tools during my nights and weekends, learning how specialized agents can orchestrate like real product teams when given proper context and structure. But in my day job as a senior technology leader, the tone shifts. I’ve found myself in increasingly chaotic meetings with senior leaders, chief technology officers, chief product officers, and engineering VPs, all trying to out-expert each other on the transformative power of AI on product and development (R&D) teams.

The energy often feels like a pitch room, not a boardroom. Someone declares Agile obsolete. Another suggests we can replace six engineers with AI agents. A few toss around claims of “30× productivity.” I listen, sometimes fascinated, often frustrated, at how quickly the conversation jumps to conclusions without asking the right questions. More troubling, many of these executives are under real pressure from investors and ownership to show ROI. If $1M is spent on AI adoption, how do we justify the return? What metrics will we use to report back?

Hearing the Hype (and Feeling the Exhaustion)

One executive confidently declared, “Agile and Lean are dead,” citing the rise of autonomous AI agents that can plan, code, test, and deploy without human guidance. His opinion echoed a recent blog post, “Agile Is Dead: Long Live Agentic Development,” which criticized Agile rituals like daily stand-ups and sprints as outdated and encouraged teams to let agents take over the workflow¹. Meanwhile, agile coaches argue that bad Agile, not Agile itself, is the real problem, and that AI can strengthen Agile if applied thoughtfully.

The hype escalates when someone shares a story of high-output engineering from a senior developer who keeps up with AI capabilities: 70 AI-assisted commits in a single night, barely touching the keyboard. Another proposes shrinking an 8-person team to just two engineers, one writing prompts and one overseeing quality, while the AI agents do the rest. These stories are becoming increasingly common, especially as research suggests that AI can dramatically reduce the number of engineers needed for many projects². Elad Gil even claimed most engineering teams could shrink by 5×–10×.

But these same reports caution against drawing premature conclusions. They warn that while AI enables productivity gains, smaller teams risk creating knowledge silos, reducing quality, and overloading the remaining developers². Other sources echo this risk: Software Engineering Intelligence (SEI) tools have flagged increased fragility and reduced clarity in AI-generated code when review practices and documentation are lacking³.

What If We’re Already Measuring the Right Things?

While executives debate whether Agile is dead, I find myself thinking: we already have the tools to measure AI’s impact; we just need to use them.

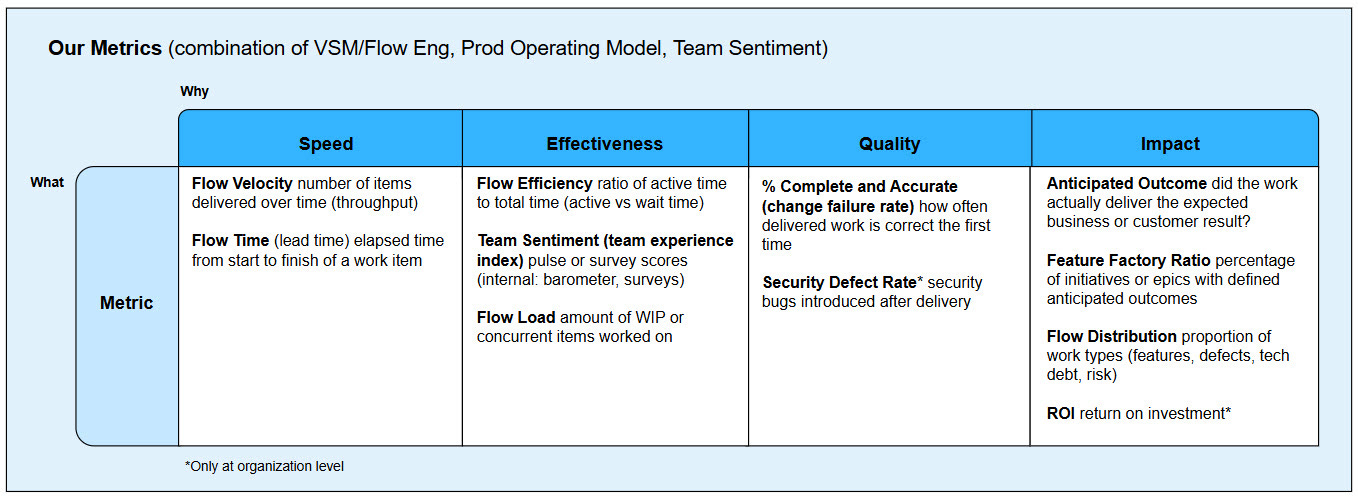

In my organization’s division, we’ve spent years developing a software delivery metrics strategy centered on Value Stream Management, Flow Metrics, and team sentiment. These metrics already show how work flows through the system, from idea to implementation to value. They include:

- Flow metrics like distribution, throughput, time, efficiency, and load
- Quality indicators like change failure rate and security defect rate
- Sentiment and engagement data from team surveys
- Outcome-oriented metrics like anticipated outcomes and goal (OKR) alignment

Recently, I aligned our Flow Metrics with the DX Core 4 Framework⁴ matrix, organizing them into four key categories: speed, effectiveness, quality, and impact. We made these visual and accessible, using this clear chart to show how each metric relates to delivery health. These metrics don’t assume Agile is obsolete or that AI is the solution. They track how effectively our teams are delivering value.

So when senior leaders asked, “How will we measure AI’s impact?” I reminded them that we already are. If AI helps us move faster, we’ll see it in flow time. If it increases capacity, we’ll see it in throughput (flow velocity). If it maintains or improves quality, our defect rates and sentiment scores will reflect that. The same value stream lens that shows us where work gets stuck will also reveal whether AI helps us unstick it.

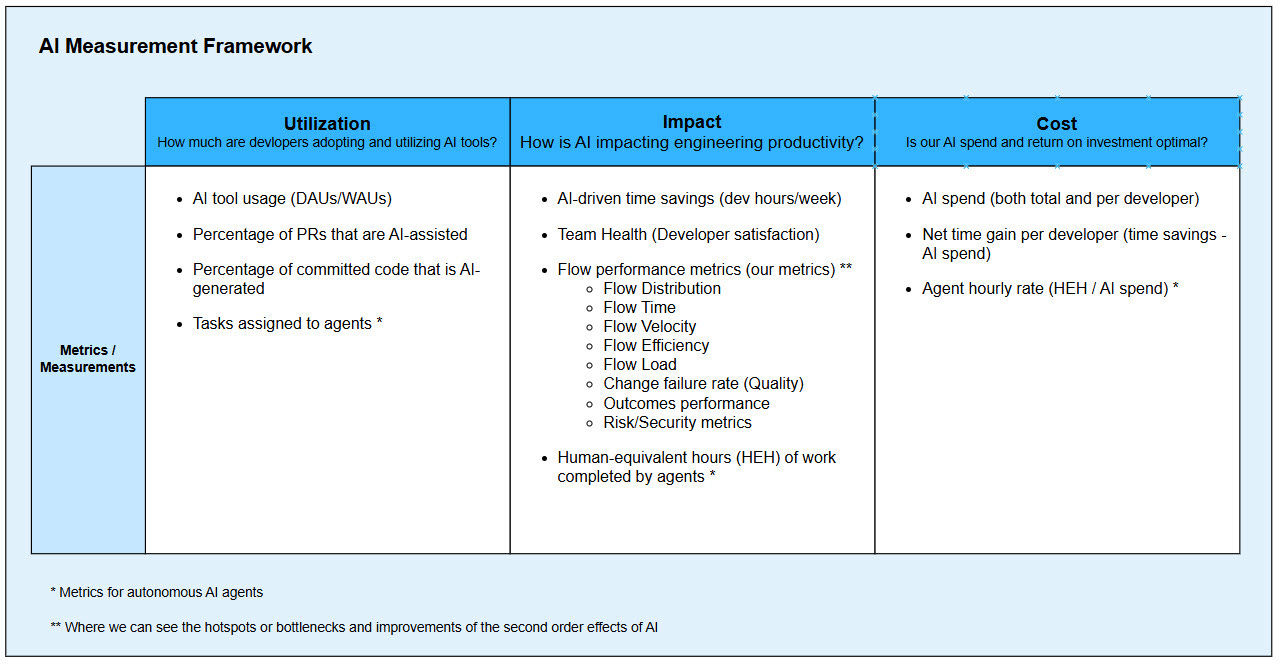
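To make that before-and-after comparison concrete, here is a minimal Python sketch. It assumes each work item has a start and finish date and that we compare two four-week windows; the `WorkItem` type, the `summarize` helper, and all dates are hypothetical illustrations, not data or tooling from my organization.

```python
from dataclasses import dataclass
from datetime import date
from statistics import median


@dataclass
class WorkItem:
    """A delivered work item (hypothetical shape, for illustration only)."""
    started: date
    finished: date


def flow_time_days(item: WorkItem) -> int:
    """Elapsed calendar days from start of work to delivery."""
    return (item.finished - item.started).days


def summarize(items: list[WorkItem], weeks: int) -> dict[str, float]:
    """Median flow time (days) and throughput (items per week) for one period."""
    return {
        "median_flow_time_days": median(flow_time_days(i) for i in items),
        "throughput_per_week": len(items) / weeks,
    }


# Made-up items completed in a 4-week window before AI tooling was introduced.
before = [
    WorkItem(date(2025, 3, 3), date(2025, 3, 13)),
    WorkItem(date(2025, 3, 5), date(2025, 3, 19)),
    WorkItem(date(2025, 3, 10), date(2025, 3, 18)),
    WorkItem(date(2025, 3, 17), date(2025, 3, 29)),
]

# Made-up items completed in a 4-week window after AI tooling was introduced.
after = [
    WorkItem(date(2025, 6, 2), date(2025, 6, 9)),
    WorkItem(date(2025, 6, 3), date(2025, 6, 12)),
    WorkItem(date(2025, 6, 9), date(2025, 6, 15)),
    WorkItem(date(2025, 6, 10), date(2025, 6, 20)),
    WorkItem(date(2025, 6, 16), date(2025, 6, 24)),
    WorkItem(date(2025, 6, 18), date(2025, 6, 27)),
]

baseline = summarize(before, weeks=4)
current = summarize(after, weeks=4)

for name in baseline:
    print(f"{name}: {baseline[name]} -> {current[name]}")
```

A shift in these two numbers is only a signal, not proof of cause. It should be read alongside the quality and sentiment metrics so that a faster flow time is not bought with a higher change failure rate.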

Building on Existing Metrics: The AI Measurement Framework

Instead of creating an entirely new system, I layered an established AI Measurement Framework on top of our existing performance metrics⁵. The framework includes three categories:

Utilization:

- % of AI-generated code
- % of developers using AI tools
- Frequency of AI-agent use per task

Impact:

- Changes in flow metrics (faster cycle time)
- Developer satisfaction or frustration
- Delivered value per team or engineer

Cost:

- Time saved vs. licensing and premium token cost
- Net benefit of AI subscriptions or infrastructure

This approach answers the following questions: Are developers using AI tools? Does that usage make a measurable difference? And does the difference justify the investment?
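The cost question comes down to simple arithmetic. The sketch below is one possible way to frame it in Python, assuming we can estimate hours saved per developer and know the license and token spend. The `net_monthly_benefit` function and every number in it are hypothetical and are not taken from the framework itself.

```python
def net_monthly_benefit(
    developers: int,
    hours_saved_per_dev: float,
    loaded_hourly_rate: float,
    license_cost_per_dev: float,
    token_cost: float,
) -> dict[str, float]:
    """Compare the estimated value of time saved with AI tooling spend for one month.

    All inputs are estimates supplied by the caller; hours saved in particular
    is usually self-reported and should be treated as a rough figure.
    """
    value_of_time_saved = developers * hours_saved_per_dev * loaded_hourly_rate
    total_cost = developers * license_cost_per_dev + token_cost
    return {
        "value_of_time_saved": value_of_time_saved,
        "total_cost": total_cost,
        "net_benefit": value_of_time_saved - total_cost,
    }


# Illustrative inputs only: 40 developers, 6 hours saved each per month,
# a $90 loaded hourly rate, $40 per-seat licenses, and $3,000 in premium tokens.
result = net_monthly_benefit(
    developers=40,
    hours_saved_per_dev=6,
    loaded_hourly_rate=90,
    license_cost_per_dev=40,
    token_cost=3000,
)

for name, amount in result.items():
    print(f"{name}: ${amount:,.0f}")
```

Time saved only becomes value if it is reinvested in delivery, so a positive net benefit here still needs to show up in the impact metrics before it counts as a return.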

In a recent leadership meeting, someone asked, “What percentage of our engineers are using AI to check in code?” That’s an adoption metric, not a performance one. Others have asked whether we can measure AI-generated commits per engineer to report to the board. While technically feasible with specific developer tools, this approach risks reinforcing vanity metrics that prioritize motion over value. Without impact and ROI metrics, adoption alone can lead to gaming behavior, and teams might flood the system with low-value tasks to appear “AI productive.” What matters is whether AI is helping us deliver better, faster, and smarter.

I also recommend avoiding vanity metrics, such as lines of code or commits. These often mislead leaders into equating motion with value. Many vendors boast “AI wrote 50% of our code,” but as developer-experience researcher Laura Tacho explains, this usually counts accepted suggestions, not whether the code was modified, deleted, or even deployed.⁵ We must stay focused on outcomes, not outputs.
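The gap between accepted suggestions and code that actually ships can be shown with a small Python sketch. It assumes we could tag each AI suggestion with how many of its lines are still present in deployed code; the `Suggestion` record and the sample numbers are hypothetical, and I am not claiming any specific tool reports data in this shape.

```python
from dataclasses import dataclass


@dataclass
class Suggestion:
    """One AI code suggestion (hypothetical record, for illustration only)."""
    lines: int                # lines the assistant proposed
    accepted: bool            # whether the developer accepted the suggestion
    lines_in_production: int  # proposed lines still present in deployed code


def acceptance_rate(suggestions: list[Suggestion]) -> float:
    """Share of suggested lines that were accepted (the number vendors tend to quote)."""
    total = sum(s.lines for s in suggestions)
    accepted = sum(s.lines for s in suggestions if s.accepted)
    return accepted / total


def retention_rate(suggestions: list[Suggestion]) -> float:
    """Share of suggested lines that survived edits and deletions and reached production."""
    total = sum(s.lines for s in suggestions)
    retained = sum(s.lines_in_production for s in suggestions)
    return retained / total


# Made-up sample: some accepted code is later rewritten or deleted.
sample = [
    Suggestion(lines=40, accepted=True, lines_in_production=30),
    Suggestion(lines=20, accepted=True, lines_in_production=0),
    Suggestion(lines=25, accepted=False, lines_in_production=0),
    Suggestion(lines=15, accepted=True, lines_in_production=10),
]

print(f"accepted: {acceptance_rate(sample):.0%}")
print(f"retained in production: {retention_rate(sample):.0%}")
```

Even the retention figure is still an output measure. It narrows the gap between the headline number and reality, but it says nothing about whether the shipped code delivered value.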

The Risk of Turning AI into a Headcount Strategy

One of the more concerning trends I’m seeing is the concept of “headcount conversion,” which involves reducing team size and using the savings to fund enterprise AI licenses. If seven people can be replaced by two people, an AI license, and a premium token budget, some executives argue, then AI “pays for itself.” However, this assumes that AI can truly replace human capability and that the work will maintain its quality, context, and business value.

That might be true for narrow, repeatable tasks, or for small organizations and startups struggling with costs and revenue. But it’s dangerous to generalize. AI doesn’t hold tribal knowledge, coach junior teammates, or understand long-term trade-offs. It’s not responsible for cultural dynamics, systemic thinking, or ethical decisions.

Instead of shrinking teams, we should consider expanding capacity. AI can help us do more with the same people. Developer productivity research indicates that engineers typically reinvest AI-enabled time savings into refactoring, enhancing test coverage, and implementing cross-team improvements², which compounds over time into stronger, more resilient software.

Slowing Down to Go Fast

Leaving those leadership meetings, I felt a mix of energy and exhaustion. Many people wanted to appear intelligent, but few were asking thoughtful questions. We were racing toward solutions without clarifying what problem we were solving or how we’d measure success.

So here’s my suggestion: Let’s slow down. Let’s agree on how we’ll track the impact of AI investments. Let’s integrate those measurements into systems we already trust. And let’s stop treating AI as a replacement for frameworks that still work; instead, let’s use it as a powerful tool that helps us deliver better, faster, and with more intention.

AI isn’t a framework. It’s an accelerator. And like any accelerator, it’s only valuable if we’re steering in the right direction.

References

1. Leschorn, J. (2025, May 29). Agile Is Dead: Long Live Agentic Development. Superwise. https://superwise.ai/blog/agile-is-dead-long-live-agentic-development/

2. Ameenza, A. (2025, April 15). The New Minimum Viable Team: How AI Is Shrinking Software Development Teams. https://anshadameenza.com/blog/technology/ai-small-teams-software-development-revolution/

3. Circei, A. (2025, March 13). Measuring AI in Engineering: What Leaders Need to Know About Productivity, Risk and ROI. Waydev. https://waydev.co/ai-in-engineering-productivity-risk-roi/

4. Saunders, M. (2025, January 6). DX Unveils New Framework for Measuring Developer Productivity. InfoQ. https://www.infoq.com/news/2025/01/dx-core-4-framework/

5. GetDX. (2025). Measuring AI Code Assistants and Agents. DX Research. https://getdx.com/research/measuring-ai-code-assistants-and-agents/

The article was originally published on July 27, 2025.