Smarter Pull Requests: Balancing AI, Automation, and Human Review

Best practices for AI-enhanced pull requests, combining rules, templates, CI checks, and human review to deliver faster, safer code

Author’s Note

This handbook began as a PDF. I used Cursor to help convert it into a document hosted in a GitHub repository. While I reference GitHub Copilot and Claude Code for AI-assisted implementation, the focus here is on AI pull request assistants, such as:

GitHub Copilot for Pull Requests – generates AI review comments, PR summaries, and change explanations. It’s useful, but like the others below, it is just one piece of the bigger picture.

CodeRabbit – delivers AI-powered PR summaries and line-by-line feedback.

AI Code Review (GitHub Action) – runs an AI model on each PR and posts intelligent review comments.

PR-Agent (by Qodo) – open-source tool that integrates with GitHub and GitLab to review, summarize, and improve PRs.

The examples lean on GitHub Actions for the delivery pipeline, though almost everything here can be adapted to other AI tools or CI systems (GitLab, CircleCI, Jenkins, etc.). Think of this less as a one-size-fits-all recipe and more as a set of patterns you can tailor to your stack.

AI can generate code at unprecedented speed, but accountability for what goes into production still rests with humans. This raises a new challenge: how do we ensure the quality, security, performance, and resilience of what enters production when code can be created faster than ever?

This guide explores how to integrate AI responsibly into the pull request process, using rules files, standardized templates, automation, and CI enforcement, while keeping human reviewers focused on design, correctness, and risk.

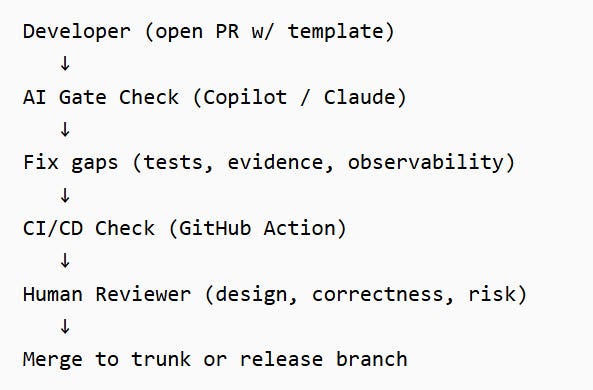

It proposes a framework for AI-augmented pull requests (PRs) that combines:

Rules files for context and consistency

An evidence-based PR template

AI pull request checks (such as GitHub AI PR Review tools, Copilot, or Claude Code) to surface gaps and propose fixes

CI enforcement to block merges if Core rules aren’t satisfied

Human review focused on design, correctness, and business alignment

This is not a set of coding best practices or AI coding instructions. It is specifically focused on pull request practices, where AI tools assist in review, validation, and context management.

Introduction

AI can help us write code faster, but this guide is not about AI writing code. It’s about AI assisting in the pull request process to ensure higher quality reviews. Today, and for the near future, AI does not carry responsibility for what runs in production. That accountability remains with developers and teams.

When throughput increases, small gaps in the review process become large risks: inconsistent PRs, checklist fatigue, missing links to tests or observability, and late integration surprises. AI can unintentionally worsen this by enabling more partially validated changes to reach review.

This handbook proposes practices for PRs that raise the floor without slowing delivery:

Rules as guidelines: Core rules apply everywhere; supporting and domain-specific rules (e.g., architecture, observability, NASA “Power of 10”) layer on when relevant.

Evidence over assertion: A PR template that requires links to tests, scans, dashboards, and rollback plans.

AI + CI assist, humans decide: Copilot and Claude highlight gaps, CI enforces Core rules, and humans evaluate design quality, correctness, and risk.

Delivery-model aware: Works for both trunk-based delivery and batch releases, with extra release-level validation where needed.

The goal is to standardize within reason and establish clear, paved paths that teams can adopt, ensuring quality, security, and resilience are consistent across the organization.

A Spark of Inspiration

This handbook was inspired by an AI adoption all-hands discussion. In its wake, I shared an early draft internally with our teams as a way to consolidate scattered conversations about AI in PRs.

At that time, more GitHub AI-assisted PR reviews and comments were showing up, but no single wiki or README explained how to integrate AI consistently into pull requests. The intent was simple:

If similar content already exists elsewhere, this can be ignored. However, if not, some parts of it can still be valuable. The goal is to provide guidelines, support standardization (within reason), and create paved paths others can benefit from.

That spirit continues here: not prescriptive rules, but a framework that others can adapt.

Why PR Automation Matters

PRs are the critical checkpoint between individual work and shared code. With AI accelerating output, weaknesses in the PR process become more visible:

Inconsistent PRs – some provide detailed evidence, others say “fixed bug” with no links.

Checklist fatigue – reviewers repeat “Did you add tests? Where’s the migration plan?”

Missing context – no links to test runs, coverage, or dashboards.

Integration risk – batch releases expose conflicts late.

Automation addresses these by:

Standardizing expectations

Automating validation of mechanics

Requiring evidence (links, not assertions)

Scaling across delivery models

Ensuring auditability

The Framework

Rules Files

Core rules (.ai/core-rules.md) are universal.

Supporting rules (architecture, observability, flags, etc.) apply when relevant.

Domain-specific rules (e.g., NASA “Power of 10”) may be adopted in specialized contexts.
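One way to make the "apply when relevant" layering concrete is to choose rules files from the paths a PR touches. The Python sketch below assumes that approach; only `.ai/core-rules.md` comes from this handbook, while the other file names and path patterns are hypothetical placeholders to adapt to your own repository.

```python
from fnmatch import fnmatchcase

# Core rules apply to every PR (path taken from this handbook).
CORE_RULES = ".ai/core-rules.md"

# Hypothetical supporting and domain-specific rules files, keyed to the
# path patterns that make them relevant. Note: in fnmatch, "*" also matches "/".
CONDITIONAL_RULES = {
    ".ai/architecture-rules.md": ["src/api/*", "src/domain/*"],
    ".ai/observability-rules.md": ["*/metrics*", "*/logging*"],
    ".ai/feature-flag-rules.md": ["config/flags/*"],
    ".ai/power-of-10-rules.md": ["firmware/*"],
}


def select_rules(changed_files: list[str]) -> list[str]:
    """Return the rules files an AI assistant or CI check should load for a PR."""
    selected = [CORE_RULES]  # Core rules are always included
    for rules_file, patterns in CONDITIONAL_RULES.items():
        if any(fnmatchcase(path, pattern) for path in changed_files for pattern in patterns):
            selected.append(rules_file)
    return selected


if __name__ == "__main__":
    print(select_rules(["src/api/orders.py", "README.md"]))
```

Keeping the mapping in one place means the same selection can feed both an AI assistant's context and a CI check, so the two never disagree about which rules a PR must satisfy.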

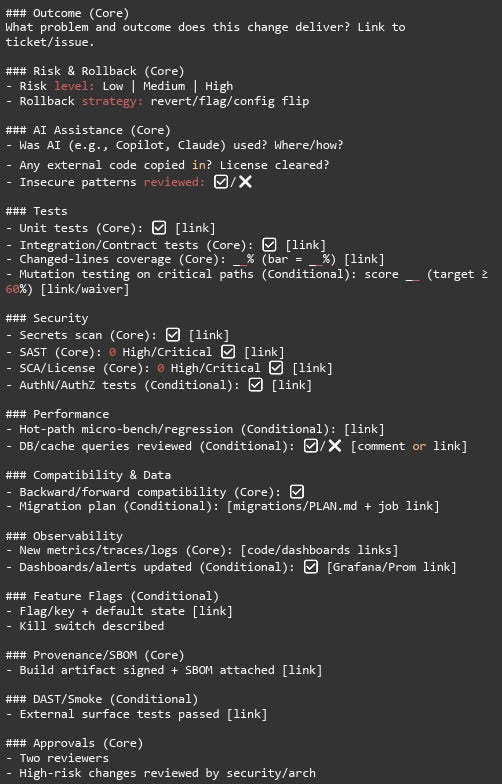

PR Template

A standardized PR template ensures evidence over assertions:

Outcome & risk/rollback plan

AI assistance disclosure

Links to unit/integration tests, coverage %

Links to security scans (SAST, SCA, secrets)

Observability metrics & dashboards

Feature flag configs & rollback toggles
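As a sketch, the template fields above could be kept as data and rendered into a Markdown file in one of the locations GitHub checks for a default PR template, such as `.github/pull_request_template.md`. The section titles, hint text, and the `TODO` placeholder below are illustrative choices, not a required format.

```python
# Hypothetical section titles and hints mirroring the template fields above.
TEMPLATE_SECTIONS = {
    "Outcome": "What changes, and for whom?",
    "Risk & Rollback": "Known risks and the rollback plan.",
    "AI Assistance": "Which AI tools helped, and with what?",
    "Tests": "Links to unit/integration test runs and coverage %.",
    "Security Scans": "Links to SAST, SCA, and secrets scan results.",
    "Observability": "Links to metrics and dashboards.",
    "Feature Flags": "Flag configs and rollback toggles.",
}

# Literal marker a CI check can look for to detect unfilled sections.
PLACEHOLDER = "TODO"


def render_template(sections: dict[str, str]) -> str:
    """Build a Markdown PR template: a heading, hint comment, and placeholder per section."""
    lines: list[str] = []
    for title, hint in sections.items():
        lines += [f"## {title}", f"<!-- {hint} -->", PLACEHOLDER, ""]
    return "\n".join(lines)


if __name__ == "__main__":
    # Redirect stdout to .github/pull_request_template.md to install it.
    print(render_template(TEMPLATE_SECTIONS))
```

The HTML comments guide the author without showing up in the rendered PR, while the explicit placeholder gives automation something unambiguous to detect when a section is left empty.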

AI Gate Checks

Copilot: Summarizes diffs, fills PR template, highlights missing links.

Claude Code: Auto-loads CLAUDE.md; commands like /check-pr, /add-observability, /migration-plan.

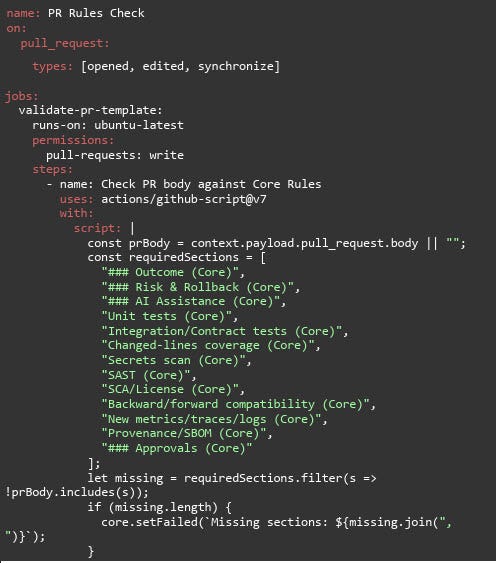

GitHub Action: PR Rules Check

CI Enforcement

GitHub Actions validate that Core sections exist, placeholders are filled, and evidence is linked. Merges are blocked until every check passes.
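A minimal sketch of such a check, assuming the PR body uses `## ` headings per section and a literal `TODO` placeholder (both hypothetical conventions): it reports missing Core sections, unfilled placeholders, and evidence sections without a link. The section names are illustrative; keep them in sync with your own template.

```python
import re

# Hypothetical Core sections; keep in sync with your PR template headings.
CORE_SECTIONS = ["Outcome", "Risk & Rollback", "AI Assistance", "Tests", "Security Scans"]
# Sections that must contain at least one link (evidence, not assertion).
EVIDENCE_SECTIONS = ["Tests", "Security Scans"]
PLACEHOLDER = "TODO"
URL_RE = re.compile(r"https?://\S+")


def split_sections(body: str) -> dict[str, str]:
    """Map each '## Heading' in the PR body to the text beneath it."""
    sections: dict[str, str] = {}
    current = None
    for line in body.splitlines():
        match = re.match(r"^##\s+(.+?)\s*$", line)
        if match:
            current = match.group(1)
            sections[current] = ""
        elif current is not None:
            sections[current] += line + "\n"
    return sections


def validate(body: str) -> list[str]:
    """Return human-readable problems; an empty list means the PR passes."""
    sections = split_sections(body)
    errors = []
    for name in CORE_SECTIONS:
        if name not in sections:
            errors.append(f"Missing section: {name}")
            continue
        # Ignore HTML hint comments left over from the template.
        text = re.sub(r"<!--.*?-->", "", sections[name], flags=re.DOTALL).strip()
        if not text or PLACEHOLDER in text:
            errors.append(f"Section not filled in: {name}")
        elif name in EVIDENCE_SECTIONS and not URL_RE.search(text):
            errors.append(f"No evidence link in: {name}")
    return errors


def report(body: str) -> int:
    """Print GitHub Actions error annotations and return a process exit code."""
    problems = validate(body)
    for problem in problems:
        print(f"::error::{problem}")
    return 1 if problems else 0
```

In a workflow, a step could pass the PR body (available as `github.event.pull_request.body` in the event context, preferably through an environment variable rather than inline interpolation) to `report()` and exit with its return value. The `::error::` lines show up as annotations on the run; the merge itself is blocked only when the job is marked as a required status check in the branch protection settings.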

Human Review

Automation raises the floor. Humans still:

Evaluate design and architecture.

Confirm correctness and business fit. (Note: once business documentation is part of the AI context, AI can help with this as well.)

Assess readability, maintainability, and tradeoffs.

Delivery Models

Trunk-Based Delivery

Each PR is a release candidate.

Core rules enforced at the PR level.

Batch Release Trains

PR-level rules still apply.

Additional release-level validation:

Manifest listing features, risks, and rollback plans

Integrated regression + performance tests

Migration coordination across features

Staged rollout with auto-abort thresholds
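The auto-abort idea can be sketched as a loop that widens exposure stage by stage and stops as soon as observed metrics cross that stage's thresholds. The stage names, traffic percentages, thresholds, and the `observe` callback below are hypothetical; in practice the metrics would come from your monitoring system, and an abort would trigger the rollback plan recorded in the release manifest.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Stage:
    name: str
    traffic_percent: int       # share of traffic exposed (informational here)
    max_error_rate: float      # abort if the observed error rate exceeds this
    max_p95_latency_ms: float  # abort if observed p95 latency exceeds this


# Hypothetical stages and thresholds, for illustration only.
STAGES = [
    Stage("canary", 1, 0.01, 300.0),
    Stage("early", 10, 0.01, 300.0),
    Stage("half", 50, 0.005, 250.0),
    Stage("full", 100, 0.005, 250.0),
]


def run_rollout(
    stages: list[Stage],
    observe: Callable[[Stage], tuple[float, float]],
) -> tuple[list[str], Optional[str]]:
    """Advance stage by stage; observe(stage) returns (error_rate, p95_latency_ms).

    Returns the names of completed stages and the stage that aborted (or None).
    """
    completed: list[str] = []
    for stage in stages:
        error_rate, p95_ms = observe(stage)
        if error_rate > stage.max_error_rate or p95_ms > stage.max_p95_latency_ms:
            return completed, stage.name  # auto-abort: hand off to the rollback plan
        completed.append(stage.name)
    return completed, None


# Simulated metrics: the error rate spikes once half of traffic is exposed.
simulated = {"canary": (0.002, 180.0), "early": (0.004, 210.0), "half": (0.02, 240.0)}
done, aborted = run_rollout(STAGES, lambda s: simulated.get(s.name, (0.0, 0.0)))
print(done, aborted)  # ['canary', 'early'] half
```

Checking thresholds per stage lets tolerances tighten as exposure grows, which is why the later stages in this sketch use stricter limits than the canary.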

Example: Day in the Life of a PR

Developer opens PR → template fills automatically.

Runs /check-pr in Claude → surfaces missing test link & observability metric.

Adds links to unit/integration tests, coverage report, CodeQL scan, Grafana dashboard.

Pushes update → GitHub Action validates Core rules.

Human reviewer now focuses on API design, domain model, and risk.

PR merges with confidence.

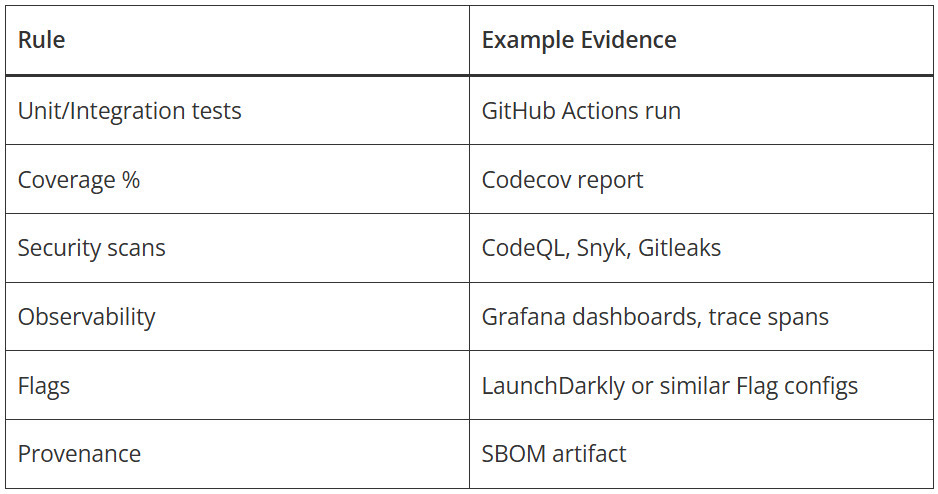

Evidence Links Table

Workflow Diagram

Resources (complete versions of the handbook)

Want the deep dive? Grab one of the complete handbook versions below.

GitHub repository with source (clone/fork)

GitHub Pages version for easy navigation

Conclusion

AI speeds up coding, but responsibility for production code remains with humans. By embedding rules, templates, AI gate checks, CI enforcement, and human judgment into PRs, organizations can keep pace with AI while preserving accountability.

This handbook is a starting point: a framework of guidelines to help identify areas of standardization and clear paths forward that teams at all levels of an organization can benefit from.

References

Perforce. NASA’s 10 Rules for Developing Safety-Critical Code. https://afterburnout.co/p/ai-promised-to-make-us-more-efficient

This article was originally published on September 28, 2025.